If you’re buying projectors for your own brand, you’re not buying a gadget—you’re buying a system that has to behave predictably in real rooms, across thousands of units, with customers who will judge you on setup time and stability more than on any spec sheet. That’s why DLP projector engineering and selection is not a “technical appendix” to sourcing; it is the sourcing strategy. Get the fundamentals right—brightness measurement, throw geometry, focus and correction behavior, motion processing, and production consistency—and your product line scales with fewer returns, fewer disputes, and fewer late-night calls from the channel.

This guide is written for B2B readers: product managers, procurement teams, distributors, and integrators who want a clear decision path and practical verification steps. It stays focused on engineering factors that influence outcomes you can measure and contract around. It also connects those factors to what an OEM/ODM partner must be able to build, validate, and support.

What B2B Buyers Actually Need: Predictability, Not Promises

A consumer buyer might accept a projector that looks good “most of the time.” A B2B buyer can’t. Your reseller can’t ship “most of the time,” and your support team can’t handle a vague product definition.

In practice, B2B outcomes hinge on four questions that don’t show up in glossy marketing copy. First, can the projector hit a credible brightness level under a defined method, so you don’t fight about lumens after delivery? Second, can it fit typical rooms, meaning the throw ratio matches the way people actually place the unit? Third, can it focus and correct reliably, especially when users put it off-center, tilt it, or move it between locations? Fourth, can it keep working smoothly after months of use, when apps update, storage fills, and the novelty wears off?

Those questions are the spine of this hub. Everything else is secondary.

Brightness That Holds Up in the Real World: ANSI, ISO, and the Contract Clause

Brightness is the most argued-about projector spec because it is both important and easy to misunderstand. You’ll see different lumen labels in the market, but for B2B, the real issue is not which label you prefer—it’s whether you can reproduce the number, using a shared method, under conditions you can describe in writing.

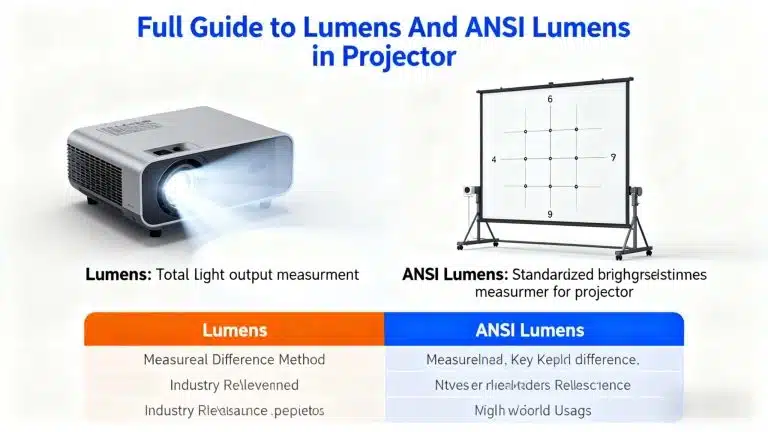

What “ANSI Lumens” Means for Acceptance Testing

ANSI lumens refers to a standardized approach that averages measurements taken at multiple points across the projected image. You can think of it as an attempt to move brightness claims from storytelling into repeatable testing. The basic concept is simple: project a full white field, measure illuminance in lux at the center of each zone of a three-by-three grid, average the nine readings, and multiply that average by the image area in square meters to get lumens.
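The averaging step can be sketched in a few lines of Python. This is a minimal illustration of the nine-point idea, not a substitute for the full test procedure: the function name, the sample readings, and the image dimensions are all hypothetical.

```python
from statistics import mean


def ansi_lumens(lux_readings, image_width_m, image_height_m):
    """Estimate lumens from a nine-point white-field measurement.

    lux_readings: nine illuminance readings in lux, one per zone of a 3x3 grid.
    image_width_m, image_height_m: projected image size in meters.
    """
    if len(lux_readings) != 9:
        raise ValueError("expected nine readings, one per zone of a 3x3 grid")
    image_area_m2 = image_width_m * image_height_m
    # Lumens = average illuminance (lux) x illuminated area (square meters).
    return mean(lux_readings) * image_area_m2


# Hypothetical readings for illustration only, not measured data.
readings = [210, 225, 205, 230, 250, 228, 200, 220, 212]
lumens = ansi_lumens(readings, image_width_m=2.0, image_height_m=1.125)
print(f"{lumens:.0f} lumens")
```

Note how much the result depends on the image size you enter, which is one reason the acceptance conditions need to be written down alongside the number.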

For procurement, the value is straightforward: it gives both sides a shared baseline. If you use ANSI lumens language in an RFQ, then the next step is to define exactly how you will verify it. Without that, “ANSI” becomes a word that still ends in a dispute.

ISO Lumens and the “Conversion Trap”

ISO-based brightness measurement is also used in the industry. The problem is that buyers sometimes treat ISO and ANSI as interchangeable labels and try to convert between them casually. That’s risky. Even when both labels are applied legitimately, differences in test conditions and procedures can lead to different numeric outcomes.

The safe move is to pick one method for contractual acceptance, state it clearly, and test accordingly. If your channel expects ANSI-style validation, anchor the acceptance criteria there. If your market norms use ISO, anchor there. The key is alignment, not ideology.

The Most Useful B2B Brightness Tool: Acceptance Criteria You Can Recreate

Here is what a serious brightness acceptance clause typically includes, expressed in plain language rather than legalese: the measurement method, the screen size, the throw distance, the ambient light condition, the image mode, the warm-up time, and the tolerance window for pass/fail. When those items are defined, the argument turns into a test, not a debate.
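One way to keep such a clause unambiguous is to write it as structured data that both sides can read the same way. The sketch below is an assumption about how that might look, not a standard format: the field names, the example values, and the one-sided tolerance rule (a unit passes if it measures at or above the rated value minus the tolerance) are illustrative choices you would replace with your own contract terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BrightnessAcceptance:
    """Hypothetical acceptance clause; every field name here is illustrative."""
    method: str                # e.g. "ANSI nine-point" or an ISO-based method
    screen_diagonal_in: float  # screen size used for the test
    throw_distance_m: float    # lens-to-screen distance
    ambient_lux_max: float     # maximum ambient light allowed in the test room
    image_mode: str            # picture mode the unit is set to
    warmup_minutes: int        # warm-up time before measuring
    rated_lumens: float        # the number being verified
    tolerance_pct: float       # allowed shortfall below the rated value

    def min_passing_lumens(self) -> float:
        return self.rated_lumens * (100 - self.tolerance_pct) / 100

    def passes(self, measured_lumens: float) -> bool:
        return measured_lumens >= self.min_passing_lumens()


# Example values are placeholders, not recommendations.
clause = BrightnessAcceptance(
    method="ANSI nine-point",
    screen_diagonal_in=100,
    throw_distance_m=2.7,
    ambient_lux_max=1.0,
    image_mode="Standard",
    warmup_minutes=15,
    rated_lumens=500,
    tolerance_pct=10,
)
print(clause.min_passing_lumens(), clause.passes(462))
```

Whether the tolerance should be one-sided or symmetric, and whether it applies per unit or per lot, is a commercial decision the clause should state explicitly.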

This is also where content can do real work for lead generation without feeling salesy. If your blog includes a downloadable “brightness acceptance criteria template” that a buyer can paste into an RFQ, it becomes a practical asset. People save it, forward it, and come back when they are ready to source. It also positions your brand as the one that speaks the language of procurement, not hype.

Throw Ratio and Room Fit: The Geometry That Controls Returns

Brightness attracts attention, but throw ratio controls whether a product fits the rooms it is sold into. If a projector doesn’t fit typical placement distances, customers will blame the product even if it performs perfectly within its design assumptions.

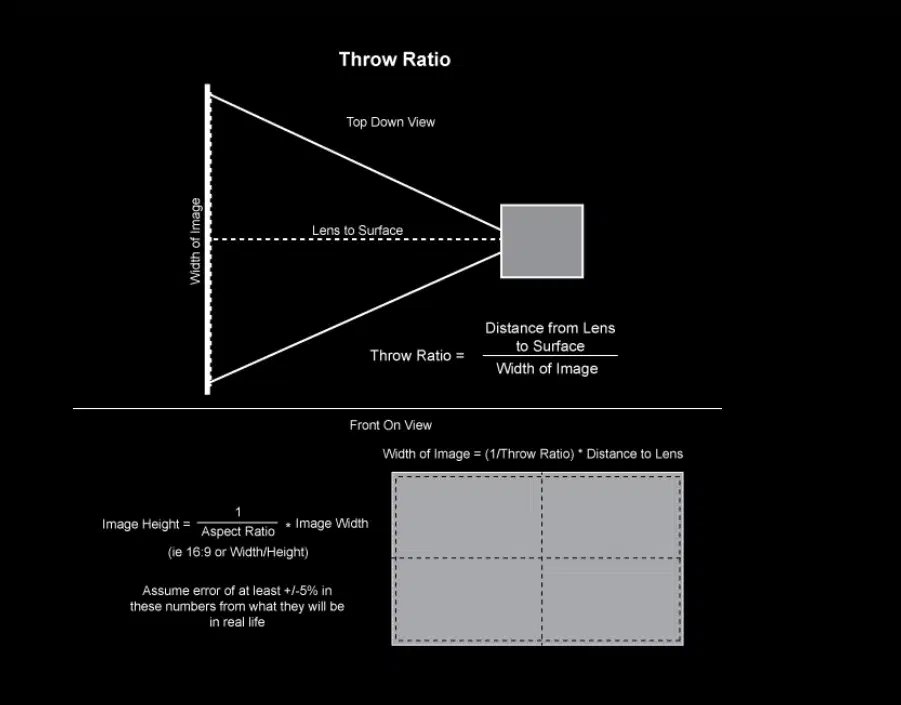

Throw ratio is simply throw distance divided by image width. It tells you how large an image you can create from a given distance. For integrators, it determines whether an installation is possible. For distributors, it determines whether the product suits typical consumer rooms and common screen sizes.

A Practical Example That Prevents Installation Mistakes

If a projector has a 1.2:1 throw ratio and your usable throw distance is 12 feet, you can estimate image width at about 10 feet, because width is distance divided by throw ratio. The actual delivered image will still depend on lens design and tolerances, but this quick calculation prevents “it doesn’t fit” surprises.
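The same arithmetic, written as a small Python sketch, also covers the reverse question of how much distance a target image width needs. The function names are hypothetical, and the 16:9 aspect ratio used to convert width into a diagonal is an assumption; substitute the native aspect ratio of the product you are evaluating.

```python
import math


def image_width(throw_distance, throw_ratio):
    """Image width from throw distance; both values use the same length unit."""
    return throw_distance / throw_ratio


def required_distance(target_width, throw_ratio):
    """Throw distance needed to reach a target image width."""
    return target_width * throw_ratio


def diagonal(width, aspect_w=16, aspect_h=9):
    """Screen diagonal for a given width, assuming a 16:9 image by default."""
    return width * math.hypot(aspect_w, aspect_h) / aspect_w


width_ft = image_width(12, 1.2)                          # the example above: about 10 ft
print(f"width: {width_ft:.1f} ft")
print(f"diagonal: {diagonal(width_ft * 12):.0f} in")     # feet converted to inches first
print(f"distance for an 8 ft wide image: {required_distance(8, 1.2):.1f} ft")
```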

In B2B buying, you want these calculations embedded into your process. The most effective format is a simple throw distance calculator that converts room depth into a range of achievable image sizes. If you publish that calculator as a downloadable spreadsheet or a lightweight web tool, you give buyers something they can use immediately, and you keep them on your site longer.
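A lightweight version of that calculator might look like the sketch below. It assumes a lens described by two throw ratios (the wide and tele ends of a zoom; pass the same value twice for a fixed lens), a 16:9 image, and a clearance margin for the space the unit and cabling take up behind the lens. All names and default values are hypothetical.

```python
import math

INCHES_PER_METER = 1 / 0.0254


def achievable_diagonals_in(room_depth_m, ratio_wide, ratio_tele,
                            clearance_m=0.3, aspect=(16, 9)):
    """Return (smallest, largest) image diagonal in inches for a room depth.

    ratio_wide is the smaller throw ratio (largest image); ratio_tele is the
    larger one. clearance_m is the depth lost to the projector body and cables.
    """
    if ratio_wide > ratio_tele:
        raise ValueError("ratio_wide must not exceed ratio_tele")
    usable_m = room_depth_m - clearance_m
    if usable_m <= 0:
        raise ValueError("room is too shallow for the given clearance")
    aspect_w, aspect_h = aspect
    width_to_diagonal = math.hypot(aspect_w, aspect_h) / aspect_w
    smallest = usable_m / ratio_tele * width_to_diagonal * INCHES_PER_METER
    largest = usable_m / ratio_wide * width_to_diagonal * INCHES_PER_METER
    return smallest, largest


# Print a small planning table for a hypothetical 1.2-1.5 zoom lens.
for depth_m in (3.0, 4.0, 5.0):
    low, high = achievable_diagonals_in(depth_m, ratio_wide=1.2, ratio_tele=1.5)
    print(f"{depth_m:.1f} m room: {low:.0f} to {high:.0f} in diagonal")
```

The result is the largest image the geometry allows from the back of the room, not a recommendation; viewing distance and brightness at that size still need their own checks.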

Standard Throw vs. Ultra Short Throw: The Trade-off Is Not Just Distance

Ultra short throw, often shortened to UST, is attractive because it allows a large image from a very short distance. It also changes the nature of the installation. With UST, small alignment errors can become big visual issues, and surface flatness matters more because the light strikes the wall at a shallow, oblique angle, so even slight waviness in the surface shows up as visible distortion.

From an engineering standpoint, a buyer should treat UST as a distinct category with its own acceptance checklist. That checklist should include leveling, distance ranges, edge sharpness evaluation, and limits for digital correction. If you sell UST through a channel without a strong setup guide, you’re basically choosing higher support costs later.

DMD Size as a Platform Signal: What Buyers Should Actually Infer

In DLP systems, the DMD (digital micromirror device) is the chip that forms the image, and it is usually described by its diagonal size. The details can get technical fast, but for B2B selection the most useful approach is to treat DMD size as a platform signal rather than a bragging point.

A compact platform designed for portability often has different constraints than a platform designed for higher brightness or larger images. Those constraints affect optical throughput, thermal headroom, lens design space, and cost structure.

How to Use DMD Size Without Over-simplifying It

The wrong way to use DMD size is to assume a single dimension tells you the whole story. The right way is to ask what design targets are realistic for the platform: intended brightness class, expected image size, portability requirements, and correction feature expectations. DMD size becomes meaningful when you connect it to those targets.

For a B2B reader, the value is decision clarity. If your brand wants a premium portable experience with fast setup, you may prioritize focus speed and correction reliability over pushing brightness into a tier the platform is not meant to sustain. If your brand wants a higher-brightness product for mixed-use environments, you may prioritize thermal stability and optical headroom.

In this hub, the practical takeaway is simple: pick the platform that matches your real use case, then build features around it. Don’t try to force an “everything product” onto a platform that will struggle to deliver the experience your market expects.

Autofocus That Feels Premium: ToF, Repeatability, and Edge Cases

Autofocus is one of those features that can transform a user’s perception, especially for portable use. But it also becomes a liability when it is inconsistent, slow, or prone to “hunting” behavior.

Time-of-Flight (ToF) autofocus uses a ranging sensor to estimate the distance to the projection surface quickly and trigger focus adjustments without needing a visible focus pattern on the screen. For users, that can feel seamless. For buyers, the questions are more practical: how quickly does it lock, how repeatable is it, and how does it behave in less-than-perfect conditions?

How to Evaluate Autofocus Like a Buyer, Not a Reviewer

A reviewer might test autofocus once and call it “fast.” A buyer should test it the way real users behave. Move the projector between two distances repeatedly. Change wall color or surface reflectivity. Add ambient light. Place the unit slightly off-axis. Watch whether it lands on focus and stays there.

The best output of this testing is not a sentence; it’s a threshold. For example, “refocus within X seconds after movement and remain readable at Y font size on a 100-inch image.” When you define autofocus performance that way, you can put it into acceptance criteria and reduce subjective arguments.
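To show how a threshold like that turns repeated trials into a pass/fail result, here is a minimal sketch. The data structure, the function name, and the sample numbers are hypothetical, and the time limit is left as a parameter because the actual value is something you and your supplier need to agree on.

```python
from dataclasses import dataclass


@dataclass
class FocusTrial:
    refocus_seconds: float  # time from end of movement to stable focus
    text_legible: bool      # tester's judgment at the agreed font size


def evaluate_autofocus(trials, max_refocus_seconds, min_pass_rate=1.0):
    """Summarize repeated autofocus trials against agreed thresholds."""
    if not trials:
        raise ValueError("at least one trial is required")
    passed = [
        t for t in trials
        if t.text_legible and t.refocus_seconds <= max_refocus_seconds
    ]
    pass_rate = len(passed) / len(trials)
    return {
        "pass_rate": pass_rate,
        "worst_refocus_seconds": max(t.refocus_seconds for t in trials),
        "accepted": pass_rate >= min_pass_rate,
    }


# Placeholder trial data and a placeholder limit, for illustration only.
trials = [FocusTrial(1.4, True), FocusTrial(1.8, True), FocusTrial(3.2, True)]
print(evaluate_autofocus(trials, max_refocus_seconds=2.0, min_pass_rate=0.9))
```

Recording the worst case alongside the pass rate is deliberate: a unit that is usually fast but occasionally hunts for several seconds is exactly what generates support tickets.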

Autofocus Is Not Alone: It Must Work With Correction

In real setups, autofocus interacts with keystone correction, screen alignment, and obstacle handling. If these features fight each other, setup becomes unpredictable. If they cooperate, setup becomes fast and reliable.

That’s why it matters when an OEM/ODM partner can deliver not just hardware, but integrated behavior across the feature set. To see what is typically packaged under OEM/ODM services for smart correction features and platform customization, review the Toumei OEM/ODM Projector Services page. It outlines customization scope across structure, electronics, OS, and intelligent interaction functions, which is the kind of capability B2B buyers look for when building a stable product line.

Keystone Correction, Screen Alignment, and Obstacle Handling: Smart vs. Fussy

Digital correction features are often described as “automatic” and “smart,” but for B2B, the difference between “smart” and “fussy” is the difference between repeat sales and return waves.

Automatic keystone correction can save time when a user places the projector off-center. But keystone is not free; it can reduce effective resolution and can soften the image, especially when applied aggressively. Screen alignment features can help a user hit a projection surface quickly, but they need to be accurate and stable, not constantly adjusting.

Obstacle handling is also a real-world feature, not a demo trick. Users place projectors on cluttered surfaces. A partial obstruction can happen easily. A good system handles it gracefully and guides the user to a fix.

From a content strategy standpoint, this is a strong differentiator because many top-ranking pages talk about these features in generic terms. You can outperform them by explaining how to test these functions and by giving readers an installation checklist that reduces failures.

Motion Processing: MEMC That Helps Without Creating New Problems

Motion processing, often referred to as MEMC (motion estimation and motion compensation), is used to reduce judder and improve perceived smoothness in certain content types. For sports and fast motion, it can make a projector feel more “premium.” For other scenarios, it can introduce artifacts that look unnatural. It may also affect latency, which matters in interactive use cases.

A strong B2B approach is to treat MEMC as a mode-dependent feature. When you position it that way, you reduce misaligned expectations. Buyers can market “smooth sports mode” and also provide a “low-latency mode” for responsiveness. That clarity prevents customer disappointment.

If you want to make this section actionable, the best tool is a short “motion mode policy” that your brand can adopt: what the default mode is for out-of-box viewing, what mode is recommended for sports, and what mode is recommended for low-latency use. This keeps both marketing and support aligned.
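A policy like this is small enough to write down as a lookup table that firmware defaults, marketing copy, and support scripts can all reference. The sketch below is one hypothetical way to express it; the mode names, the use-case keys, and the choice of MEMC off as the out-of-box default are assumptions, not a recommendation for any specific product.

```python
from enum import Enum


class MotionMode(Enum):
    MEMC_OFF = "memc_off"
    MEMC_SMOOTH = "memc_smooth"
    LOW_LATENCY = "low_latency"


# Hypothetical policy: one agreed mode per use case.
MOTION_MODE_POLICY = {
    "out_of_box": MotionMode.MEMC_OFF,    # assumed default; your brand may differ
    "sports": MotionMode.MEMC_SMOOTH,     # smoothness valued over latency
    "interactive": MotionMode.LOW_LATENCY,  # responsiveness valued over smoothness
}


def mode_for(use_case):
    """Return the agreed mode, falling back to the out-of-box default."""
    return MOTION_MODE_POLICY.get(use_case, MOTION_MODE_POLICY["out_of_box"])


print(mode_for("sports").value)
print(mode_for("documentary").value)  # not in the policy, so the default applies
```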

3D Support and Compatibility: What You’re Really Signing Up For

When a projector claims 3D support, B2B buyers inherit a support surface area. Customers will ask what glasses are compatible, what formats work, and what to do when they see ghosting or sync issues.

For that reason, 3D is a feature that should come with a compatibility approach. Even if you don’t publish a full compatibility matrix, you should have one internally. If 3D is central to a channel strategy, you may want a short setup guide and a simple troubleshooting flow that addresses the most common complaints.

This is another content opportunity. A high-quality “3D troubleshooting guide” that stays brand-neutral, avoids competitor comparisons, and focuses on user symptoms can capture long-tail searches while reducing the burden on your support team.

OS Stability and Streaming App Compatibility: The Buyer’s Hidden Risk

For a “smart projector,” the operating system and memory/storage configuration are part of the user experience. Performance that feels acceptable in a factory check can feel sluggish after months of real-world use, especially when apps update and caches grow.

For B2B, the safest way to specify this is to avoid vague labels and define what matters: OS category, update approach, memory/storage class, and whether the platform supports the content and DRM environment your target market expects. This is not about naming specific streaming services. It’s about ensuring the product’s software stack matches how customers will use it.

If your product strategy involves different software options by channel, that’s typically handled through OEM/ODM customization: branded UI, preloads, regional settings, update policy, and stability testing. Those are exactly the areas where a capable OEM/ODM partner can reduce risk.

The B2B Decision Framework: From “Specs” to “Signed PO”

At some point, a hub has to help people make a decision. The most useful B2B decision framework connects four layers: target scenario, measurable requirements, verification method, and delivery capability.

Start with the scenario. Is the product intended for small rooms, frequent movement, mixed lighting, or project installations? Then translate that into measurable requirements such as brightness acceptance criteria, throw ratio fit, autofocus speed thresholds, correction behavior under defined placement conditions, and motion mode policy.

Next, define verification. You don’t need an overcomplicated lab. You need repeatable testing that can be replicated by both sides. If your procurement team can test the same way the factory tests, you reduce disputes. If your channel can test the same way your procurement team tests, you reduce returns.

Finally, connect this to delivery capability. If you’re buying a standard product portfolio, the portfolio should be easy to review and compare. If you’re building a customized SKU, you need a partner who can integrate optics, electronics, OS, and intelligent functions into a stable whole.

If you want a top-level view of available DLP projector categories and portfolio structure as a starting point for platform selection, see the Toumei DLP Projector Portfolio. It’s a practical entry point when you’re narrowing down direction before a deeper technical discussion.

Common Failure Modes and How to Prevent Them Before Launch

The most expensive mistakes in projector programs are predictable, which is good news because predictable mistakes are preventable.

One common failure mode is unclear brightness definition. A product ships, the channel markets it aggressively, and then returns spike because the end-user environment is brighter than expected. The fix is not to argue about lumens; it’s to define acceptance criteria and align marketing language to realistic use.

Another failure mode is room-fit mismatch. If your channel sells into smaller rooms but the throw ratio requires more distance, customers will struggle. The fix is to use a throw distance calculator during SKU planning and design channel training around room depth and screen size.

A third failure mode is “smart feature instability.” Autofocus and correction work in demos but fail in messy rooms. The fix is to test in messy rooms before launch, define pass/fail thresholds, and tune default settings to reduce self-inflicted problems.

A fourth failure mode is long-term software slowdown. A product feels responsive on day one, then becomes sluggish. The fix is to test the OS under realistic conditions and define update policy and memory/storage requirements that match your channel.

A hub like this should not just explain these issues. It should show buyers how to avoid them with tools and templates: acceptance criteria, checklists, and test scripts. Those assets also build trust because they demonstrate you understand the procurement reality, not just the engineering vocabulary.

Shenzhen Toumei Technology Co., Ltd.: Company Snapshot

Shenzhen Toumei Technology Co., Ltd. was established in 2013 in Shenzhen, China, and describes itself as an early high-tech enterprise in China focused on DLP smart projection and 3D imaging solutions, integrating research, production, and sales. The company also highlights an IP portfolio of more than 50 patents and describes OEM solutions that cover areas such as optical design, software and hardware development, structural engineering, mold creation, assembly, and testing, supported by dedicated R&D, manufacturing, and after-sales teams. For readers who want the official company background in one place, see About Shenzhen Toumei Technology Co., Ltd.

Conclusion

A successful projector program is built less on flashy features and more on controlled engineering decisions that you can verify and defend. When you specify brightness with a reproducible method, validate throw ratio against real rooms, test autofocus and correction behaviors in messy setups, treat MEMC as a mode policy rather than a universal upgrade, and define OS stability in practical terms, you reduce the hidden costs that kill margin in B2B. The payoff is not just a smoother launch, but a product line that channels can sell confidently and support teams can sustain. If you’re sourcing for your brand, start with a portfolio view, then move quickly into acceptance criteria and customization discussions, because that’s where the real risk, and the real value, lives. If you’re scoping an OEM/ODM program and want to align requirements, test methods, and delivery checkpoints, contact Toumei for a project discussion.

FAQs

How should B2B buyers specify ANSI lumens in an RFQ?

The practical approach is to treat ANSI lumens as a test method rather than a marketing number. Ask for a defined measurement process and align on conditions such as image size, throw distance, ambient light, warm-up time, and picture mode. Then write pass/fail tolerances into the RFQ or purchase agreement so both sides can reproduce the result.

Why does throw ratio matter so much in B2B projector programs?

Throw ratio determines whether the projector fits the rooms your channel sells into. A mismatch creates immediate user frustration, even if brightness and image quality are strong. In B2B, throw ratio is a leading indicator of returns because customers can’t “fix” geometry with settings. A basic throw distance calculator, used during SKU planning, prevents most room-fit problems.

How should buyers evaluate autofocus before committing to a product?

Test speed, repeatability, and stability under conditions that mirror real customers. Move the projector between distances several times, vary ambient light, and use different wall surfaces. Watch for hunting behavior and measure how quickly readable focus is achieved. For procurement, convert results into acceptance thresholds, such as refocus time and legible text standards at a defined screen size.

Is MEMC always an improvement?

Not always. MEMC can make sports and fast motion look smoother, but it may introduce artifacts and can affect latency depending on implementation. The safest policy is to treat MEMC as a selectable mode, positioning it for content where smoothness is valued, while keeping a low-latency mode available for interactive or responsiveness-sensitive scenarios.

How can buyers reduce disputes in an OEM/ODM projector project?

Use written, testable requirements. Define brightness acceptance criteria, throw ratio fit targets, autofocus and correction behavior thresholds, OS stability expectations, and a clear sample-to-mass-production validation flow. When those items are measurable and aligned early, project discussions become faster, and later-stage arguments become rare.